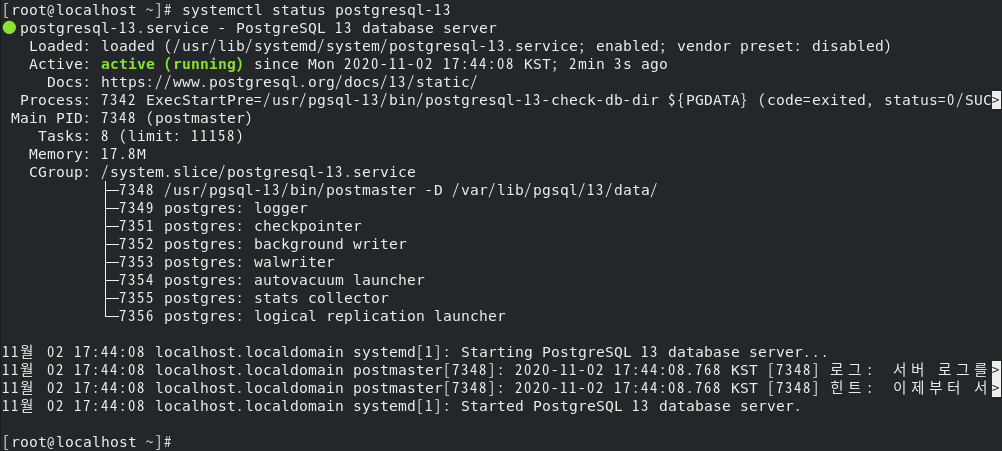

I want users to know that there are many more exciting new features related to logical replication/decoding in newer versions like PostgreSQL 13 and 14.

Before getting into the new features, let us look at what else was hurting logical replication in older PostgreSQL versions.

PostgreSQL used to keep only 4096 changes (max_changes_in_memory) for each transaction in memory. If there is a very lengthy transaction, the rest of the changes are spilled to disk as spill files. This has two implications. First, if each change is really big and there are sub-transactions, memory consumption can easily run into several GBs. This can even affect the host machine's stability and raise the chance of the OOM killer kicking in. On the other hand, if a lengthy transaction contains a great many very small changes, they are spilled to disk, causing IO overhead.

It was an almost regular complaint from many users that they kept seeing huge replication lags. A closer inspection shows the WAL Sender consuming a lot of CPU. Single-core saturation was the commonly reported case. Many times, further investigation reveals that a long-running transaction or a bulk data load was causing the generation of spill files.
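The two failure modes of a count-based limit can be pictured with a small toy model. This is only a sketch and not PostgreSQL's actual code: the `TxnBuffer` class and `simulate` function are hypothetical names, the change sizes are made up, sub-transactions are ignored, and the model assumes that reaching the limit flushes the whole in-memory buffer of that transaction to disk.

```python
from dataclasses import dataclass

# Per-transaction limit named in the text (max_changes_in_memory).
MAX_CHANGES_IN_MEMORY = 4096


@dataclass
class TxnBuffer:
    """Toy model of one transaction's decoded changes (hypothetical)."""
    changes_in_memory: int = 0
    memory_bytes: int = 0
    peak_memory_bytes: int = 0
    spilled_changes: int = 0
    spill_events: int = 0

    def add_change(self, size_bytes: int) -> None:
        # Buffer the change and track the highest memory use seen so far.
        self.changes_in_memory += 1
        self.memory_bytes += size_bytes
        self.peak_memory_bytes = max(self.peak_memory_bytes, self.memory_bytes)
        # The limit counts changes, not bytes: spill once the count is reached.
        if self.changes_in_memory >= MAX_CHANGES_IN_MEMORY:
            self.spilled_changes += self.changes_in_memory
            self.spill_events += 1
            self.changes_in_memory = 0
            self.memory_bytes = 0


def simulate(num_changes: int, change_size_bytes: int) -> TxnBuffer:
    """Feed num_changes equally sized changes into a fresh buffer."""
    buf = TxnBuffer()
    for _ in range(num_changes):
        buf.add_change(change_size_bytes)
    return buf


# A few thousand huge changes: the count limit is never reached.
big = simulate(num_changes=4000, change_size_bytes=1_000_000)
# A million tiny changes: memory stays small, but spills are frequent.
small = simulate(num_changes=1_000_000, change_size_bytes=100)

for name, buf in (("big changes", big), ("small changes", small)):
    print(name, "peak bytes:", buf.peak_memory_bytes,
          "spill events:", buf.spill_events)
```

In this model, 4,000 changes of 1 MB each never trigger a spill, so about 4 GB sits in memory, while one million 100-byte changes peak at only a few hundred KB yet spill 244 times. That is the core weakness of a limit that counts changes rather than bytes.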

#PostgreSQL 13 Patch#

I recently blogged about how Patroni Addresses the Problem of the Logical Replication Slot Failover in a PostgreSQL Cluster. In fact, nothing else was hurting logical replication as much as this problem. Even while I am writing this post, I can see customers/users who don't have Patroni struggling to address this. Thanks to the Patroni community for addressing this problem in the most magnificent way: no patch to PostgreSQL, no extensions required! A completely non-invasive solution.

As the biggest roadblock/deterrent is gone, we expect more and more users to start looking into, or reconsidering, logical replication, especially those who discarded it due to practical difficulties.
